Simultaneous Localization and Mapping (SLAM) and perception are core components of any autonomous platform, from small indoor robots to full-scale autonomous vehicles, enabling them to understand and navigate their surroundings. At AIRLab, we develop robust SLAM solutions tightly integrated with perception, allowing mobile platforms to localize accurately while incrementally building consistent representations of the environment, especially in challenging industrial, agricultural, and urban scenarios.

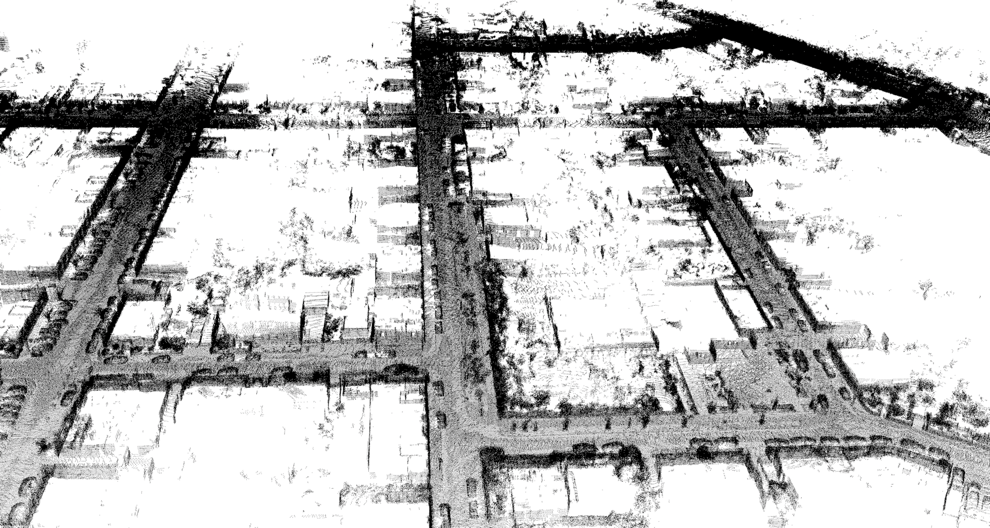

Our research focuses on improving localization, mapping, and perception under challenging real-world conditions, such as dynamic, unstructured, or repetitive environments. By leveraging advanced sensor-fusion techniques that combine data from multiple sensors (e.g., LiDAR, cameras, IMU, GPS), our systems build drift-resistant 3D maps and maintain real-time localization. This lays the foundation for scalable reconstruction and reliable operation in both known and unknown settings.