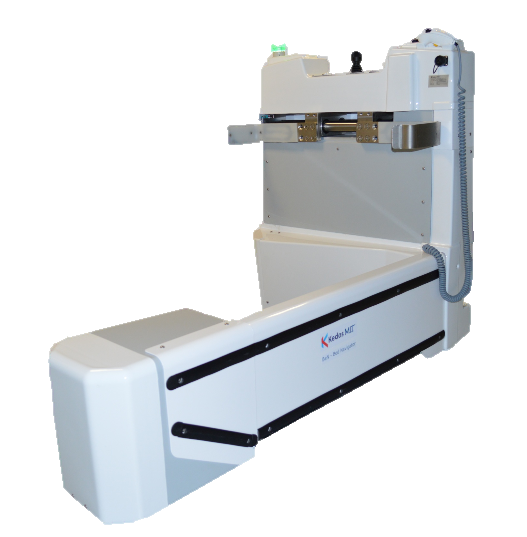

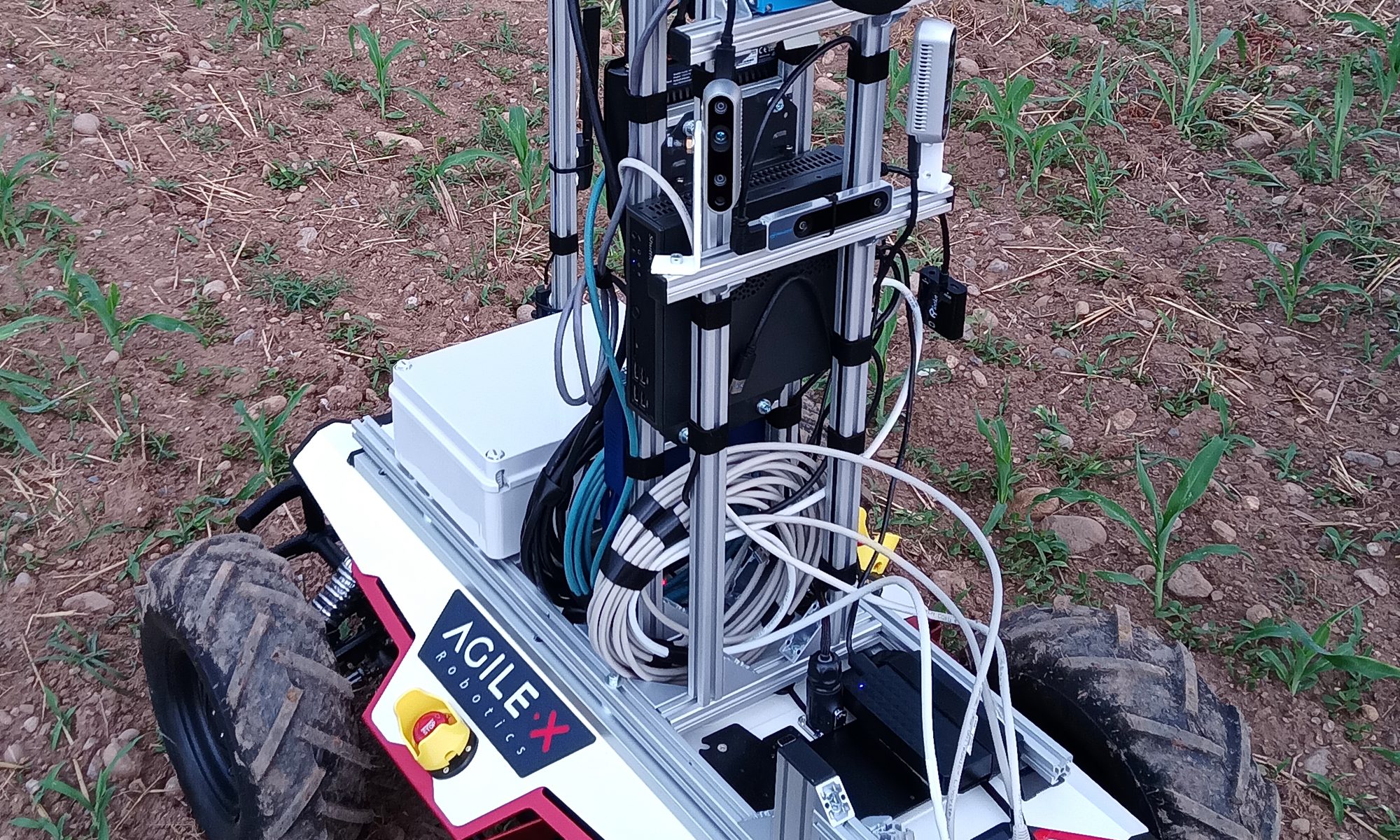

MADROB (Modular Active Door for RObot Benchmarking) and BEAST (Benchmark-Enabling Active Shopping Trolley) are benchmarks for autonomous robots aimed at measuring their capabilities and performance when dealing with devices that are common in human environments.

MADROB focuses on opening doors, while BEAST addresses the problem of pushing a shopping trolley. Both use a device with the same features as its real-world counterpart. The device is fitted with sensors that assess the robot's actions on it (e.g., the force applied to the door handle, or the precision with which the robot follows a trajectory with the trolley). It also has actuators that introduce disturbances simulating real-world phenomena (e.g., wind pushing on the door panel, or a stone under one of the trolley's wheels).

Beyond the hardware and software, MADROB and BEAST also comprise procedures and performance metrics that enable objective evaluation of robot performance, as well as comparisons between different robots and between robots and humans.

Contact: Matteo Matteucci

For additional details, see the MADROB page (http://eurobench2020.eu/developing-the-framework/modular-active-door-for-robot-benchmarking-madrob/) and the BEAST page (http://eurobench2020.eu/developing-the-framework/benchmark-enabling-active-shopping-trolley-beast/).