Benchmarking means objectively measuring the performance of a robot while it executes a task. Being able to benchmark robot systems is necessary to compare their performance, and thus to better understand their strengths and weaknesses. Both research and industry need this in order to progress.

However, benchmarking autonomous agents is tricky. How can we devise testing procedures that yield objective results? Which metrics capture the key aspects of a robot's performance? How can we compare robots that perform the same complex action in different ways?

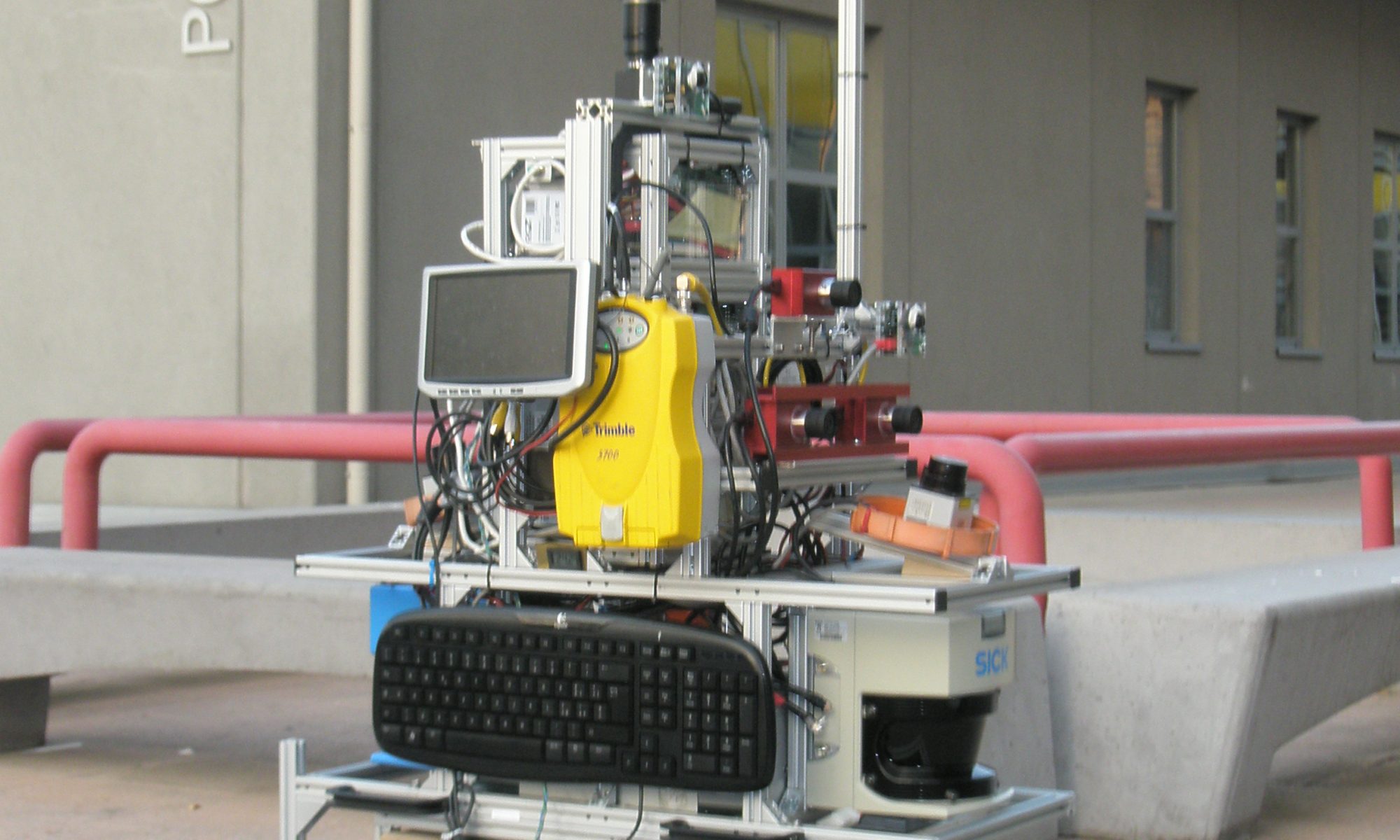

AIRLab has been working on these issues for a long time, accumulating experience in both methodology and real-world benchmark design, setup and execution.

Over the years, we have participated, and continue to participate, in many European projects on robot benchmarking, including RAWSEEDS (FP6), RoCKIn (FP7), RockEU2 (H2020), RobMoSys (H2020), EUROBENCH (H2020), SciRoc (H2020) and METRICS (H2020).

Contact: Matteo Matteucci