In regulated, highly variable settings, the supply of real-world data no longer grows at the pace demanded by modern models: collection is costly, consent is fragile, and statistical drift is inevitable. The consequence is a bottleneck that constrains training, embeds representational bias, and exposes security gaps whenever heterogeneous sources are merged.

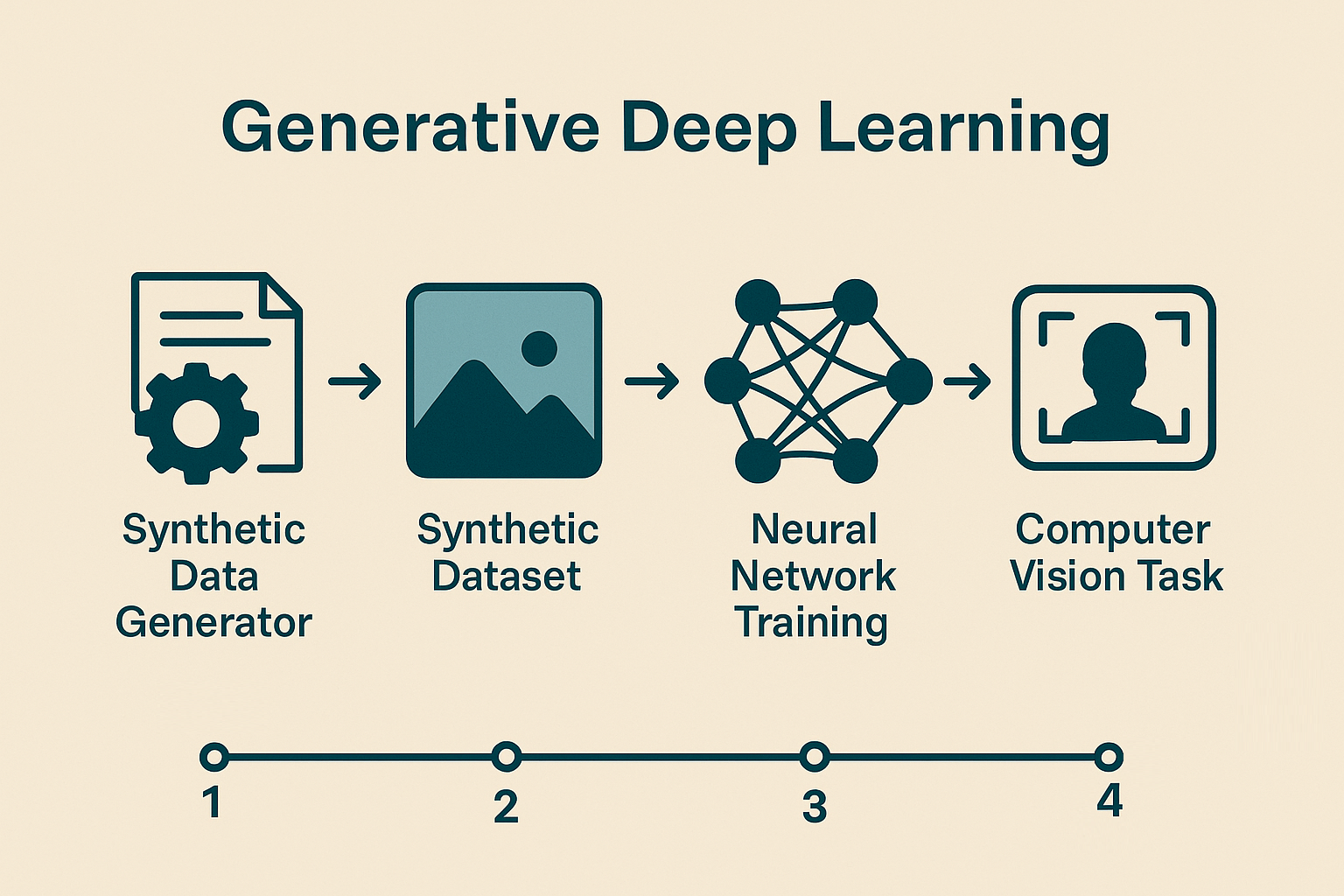

Research in generative deep learning addresses this impasse by turning generative models into full-scale data-procurement infrastructure. The goal is not the occasional synthetic sample but entire, controlled datasets that evolve continuously with the target distribution. In iterative pipelines, a discriminative model audits the coverage, balance, and fidelity of the generated material and triggers targeted regeneration where it finds gaps. The result is a just-in-time flow of licence-free, privacy-safe data, dense enough to sustain both training and validation even in the complete absence of real instances.
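
The audit-and-regenerate loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: `generate` is a stand-in for a conditional generative model, `audit` and `is_plausible` are toy stand-ins for the auditing model's balance and fidelity checks, and the class list, thresholds, and function names are all hypothetical.

```python
import random
from collections import Counter

CLASSES = ["a", "b", "c"]  # hypothetical target classes


def generate(label, n, rng):
    """Stand-in for a conditional generative model: n (label, value) pairs."""
    return [(label, rng.gauss(0.0, 1.0)) for _ in range(n)]


def is_plausible(sample, max_abs=3.0):
    """Toy fidelity check: reject values far outside the expected range."""
    return abs(sample[1]) <= max_abs


def audit(dataset, target_per_class):
    """Toy coverage/balance audit: return the shortfall for each class."""
    counts = Counter(label for label, _ in dataset)
    return {
        c: target_per_class - counts[c]
        for c in CLASSES
        if counts[c] < target_per_class
    }


def build_dataset(target_per_class, seed=0, max_rounds=50):
    """Regenerate only the under-covered classes until the audit passes."""
    rng = random.Random(seed)
    dataset = []
    for _ in range(max_rounds):
        gaps = audit(dataset, target_per_class)
        if not gaps:
            break  # audit passed: every class meets its target
        for label, missing in gaps.items():
            candidates = generate(label, missing, rng)
            # Keep only samples that pass the fidelity check.
            dataset.extend(s for s in candidates if is_plausible(s))
    return dataset
```

Calling `build_dataset(100)` keeps requesting samples only for the classes the audit flags, so generation effort goes where coverage is missing rather than being spread uniformly. In a real pipeline the audit would be a trained model and the targets would track the evolving distribution; here both are fixed for simplicity.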